Project for doing SLAM (Simultaneous Localization and Mapping) using Lego Mindstorms hardware. The main difficulty lies in the low spatial resolution provided by the ultrasonic range finder.

For an introduction to how the map is modelled see this article.

For the Python source code, see this project on GitHub; the code is based on the pyParticleEst framework. It uses pyGame for visualization and NumPy/SciPy for computations.

The code running on the mobile robot collecting the data is developed in LEJOS.

Everything has been developed and tested on Linux, but should work on any platform supporting Python and/or LEJOS (LEJOS is only needed if you are building your own robot for collecting measurements).

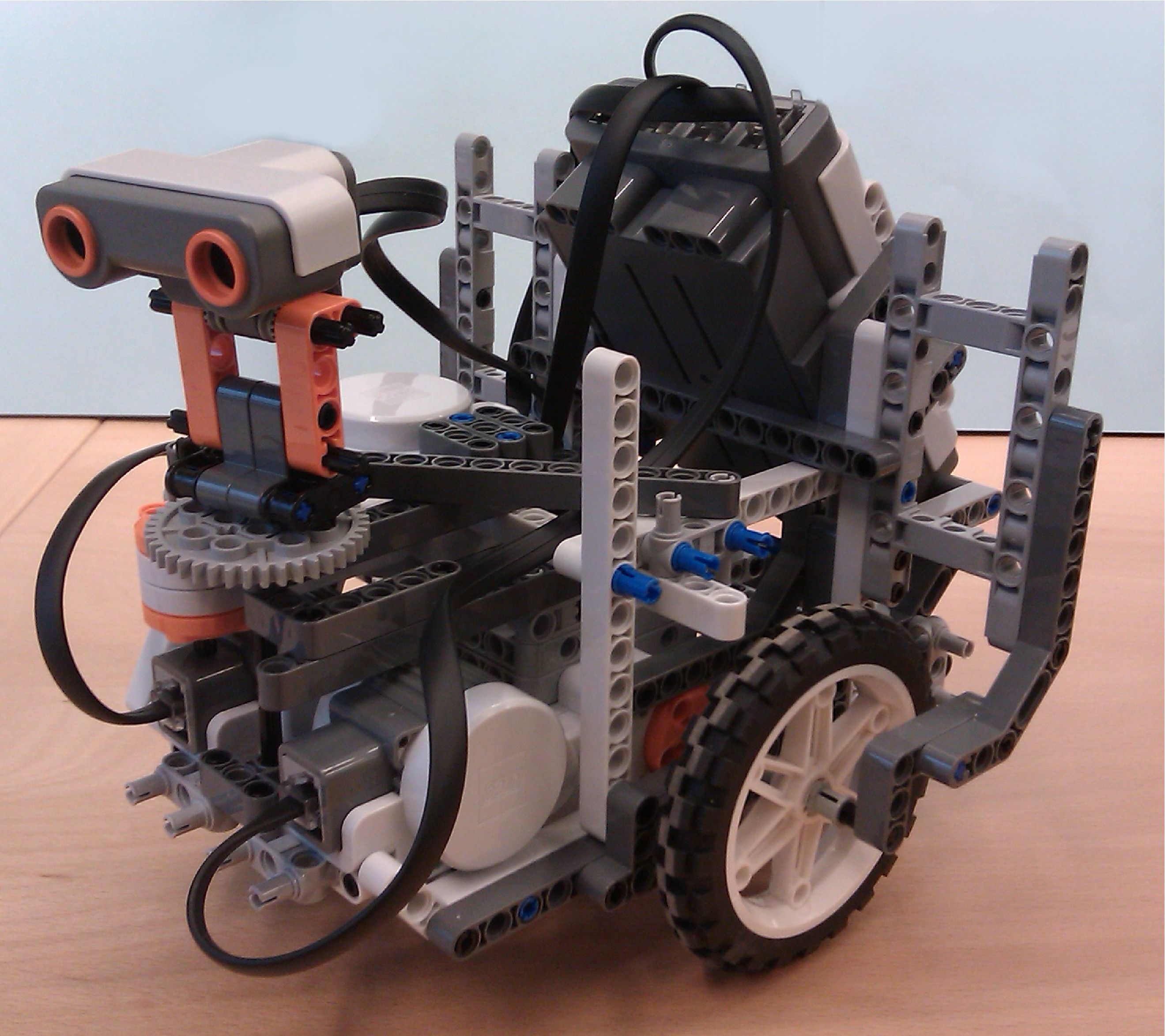

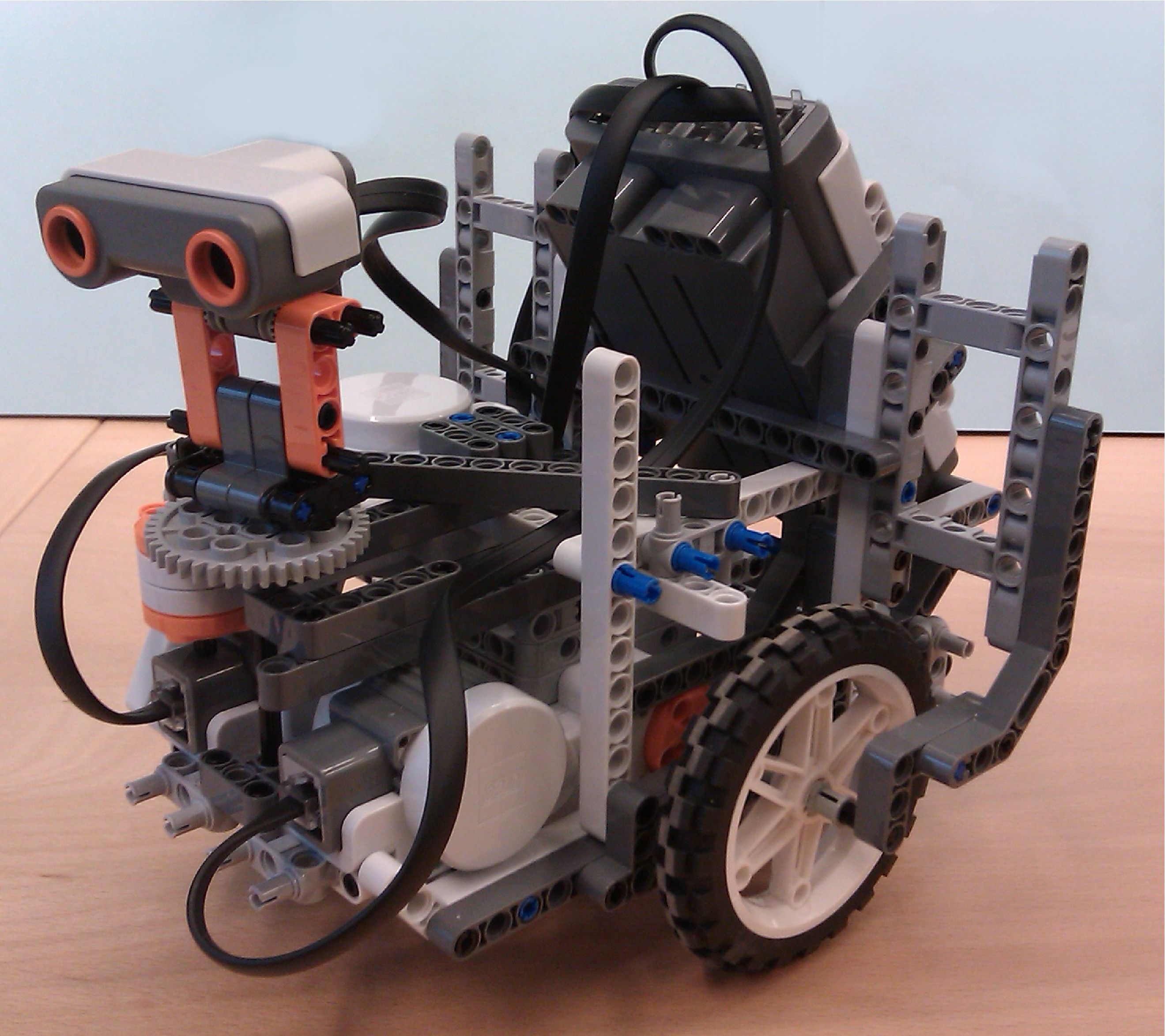

The code provided in the GitHub project is split into two main parts: a Python part for running the actual SLAM algorithm, and a Java part for communicating with the LEGO robot collecting the data. The robot used in our experiments is shown to the right.

The code should be applicable to any robot using a differential drive type of propulsion coupled with an ultrasound range finder.

The Python code makes no assumptions about a LEGO robot being used; it simply operates on ASCII data detailing the wheel encoder positions and the measured ranges. It can therefore be used for any type of robot by adjusting the variables describing wheel axle length, wheel radius and so on.
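To illustrate why only the wheel geometry needs adjusting, here is a minimal sketch of standard differential-drive dead reckoning from encoder increments. It is not taken from the nxt_slam code: the function name `update_pose`, its parameters, the assumption that encoder increments are given in degrees, and the example dimensions are all hypothetical and chosen for illustration only.

```python
import math


def update_pose(x, y, theta, d_left_deg, d_right_deg, wheel_radius, axle_length):
    """Advance a differential-drive pose by one pair of encoder increments.

    Hypothetical helper for illustration; assumes encoder increments in
    degrees and lengths in metres. Returns the new (x, y, theta).
    """
    # Distance travelled by each wheel along the ground.
    d_left = wheel_radius * math.radians(d_left_deg)
    d_right = wheel_radius * math.radians(d_right_deg)

    # Motion of the point midway between the wheels, and change in heading.
    d_center = 0.5 * (d_left + d_right)
    d_theta = (d_right - d_left) / axle_length

    # Midpoint approximation: move along the average heading of the step.
    x += d_center * math.cos(theta + 0.5 * d_theta)
    y += d_center * math.sin(theta + 0.5 * d_theta)

    # Keep the heading in [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta


# Example with made-up dimensions: 28 mm wheel radius, 112 mm axle length.
straight = update_pose(0.0, 0.0, 0.0, 360.0, 360.0, 0.028, 0.112)
spin = update_pose(0.0, 0.0, 0.0, -90.0, 90.0, 0.028, 0.112)
```

With equal increments on both wheels the robot moves straight ahead by one wheel circumference per revolution; with opposite increments it turns on the spot. Porting to a different robot in this sketch amounts to changing `wheel_radius` and `axle_length`.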

The Java part of the code is obviously tightly coupled to the Lego Mindstorms NXT system, but should work for robots with a similar type of construction.

The dataset used in the article submitted to IFACWC 2014.

The matching version of pyParticleEst can be downloaded here. (Newer versions have changed the API and can't be used unless the nxt_slam code is ported to the new API.)

To run the simulation under Linux, you can do the following (assuming your Python path is correctly configured):

    cat ifacwc_dataset.log | python nxt_slam.py